How Many Watts Does a GPU Use? (Ultimate Power Guide 2025)

A GPU typically uses about 20 to 450 watts or more, depending on the model and workload. Entry-level GPUs draw the least power, while high-end gaming and professional GPUs draw much more, especially during heavy tasks such as gaming or rendering.

In this guide, you will learn the average power consumption of different GPU classes and the factors that directly affect their real-world power draw.

What Is GPU Power Consumption?

GPU power consumption is the rate at which a graphics card draws electrical energy while performing tasks such as gaming, rendering, and video processing. It is measured in watts and varies with the GPU model, the workload, and the performance level.

Why Does GPU Wattage Matter for Your PC?

GPU wattage matters because it determines how much power your graphics card needs to work correctly. It affects system stability, performance, and which power supply you should choose. An adequately sized power supply helps prevent crashes, overheating, and hardware damage while keeping gaming and other tasks running smoothly.
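As a rough illustration, the Python sketch below estimates a power supply size from component wattages. The 100W allowance for the rest of the system, the 30% headroom, and the list of common PSU sizes are assumptions chosen for this example, not an official sizing rule; the GPU maker's recommended PSU wattage should take priority.

```python
import math

# Assumed list of common retail PSU sizes, in watts
COMMON_PSU_SIZES = [450, 550, 650, 750, 850, 1000, 1200, 1600]


def recommend_psu_watts(gpu_w: float, cpu_w: float,
                        rest_w: float = 100.0, headroom: float = 0.3) -> int:
    """Smallest common PSU size that covers the estimated load plus headroom.

    rest_w (motherboard, RAM, drives, fans) and headroom are assumptions.
    """
    load = gpu_w + cpu_w + rest_w
    target = load * (1 + headroom)
    for size in COMMON_PSU_SIZES:
        if size >= target:
            return size
    # Beyond the largest listed size: round up to the next 100 W
    return int(math.ceil(target / 100) * 100)


print(recommend_psu_watts(gpu_w=320, cpu_w=125))  # 545 W load -> 750
```

For example, a 320W GPU paired with a 125W CPU gives an estimated load of 545W; adding 30% headroom points to a 750W unit.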

How Is GPU Power Measured?

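GPU power is measured in watts (W). Manufacturers usually list a rating such as TDP (Thermal Design Power), total board power (TBP), or total graphics power (TGP). Strictly speaking, TDP describes how much heat the cooling system must remove, but in practice it is a close guide to how much power the GPU draws under sustained heavy load.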
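On NVIDIA cards you can check actual draw instead of relying only on the rated figure. The sketch below assumes the NVIDIA driver and its `nvidia-smi` tool are installed; it queries the `power.draw` and `power.limit` fields and parses the CSV output. The function names are hypothetical, and AMD and Intel GPUs need different monitoring tools.

```python
import subprocess
from typing import List, Optional, Tuple


def parse_power_csv(text: str) -> List[Tuple[Optional[float], Optional[float]]]:
    """Turn lines such as '45.67, 250.00' into (draw_w, limit_w) pairs."""
    readings = []
    for line in text.strip().splitlines():
        # Pad so every line yields exactly two fields
        fields = [f.strip() for f in line.split(",")] + ["", ""]
        pair = []
        for field in fields[:2]:
            try:
                pair.append(float(field))
            except ValueError:
                # e.g. "[N/A]" when a card does not report this value
                pair.append(None)
        readings.append((pair[0], pair[1]))
    return readings


def read_gpu_power() -> str:
    """Run nvidia-smi and return its raw CSV output (NVIDIA driver required)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=power.draw,power.limit",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

On a machine with an NVIDIA GPU, `parse_power_csv(read_gpu_power())` returns one `(draw, limit)` pair per card, in watts, with `None` for any value the card does not report.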

How Much Power Does a Low-End GPU Use?

Low-end GPUs are designed for basic tasks such as web browsing, office work, and light gaming. They are very energy-efficient, and cards rated at 75W or less can draw all their power from the PCIe x16 slot, so they often need no extra power cable from the PSU. They run cool and produce little heat, making them well suited to small PCs and budget systems.

Power usage examples:

| GPU Type | Average Power Use |
|---|---|
| Entry-level GPU | 20W – 75W |
| Office GPUs | 15W – 50W |
| Budget gaming | 50W – 75W |

How Many Watts Does a Mid-Range GPU Use?

Mid-range GPUs are designed for smooth 1080p and 1440p gaming, streaming, and content creation. They balance strong performance with reasonable power use. These cards usually need one or two PCIe power connectors (6-pin or 8-pin) and run most reliably on a good-quality power supply.

| GPU Type | Average Power Use |
|---|---|
| Mid-range gaming | 100W – 180W |
| Performance cards | 180W – 250W |
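A card's power connectors hint at its power ceiling. The sketch below adds up the nominal ratings from the PCI Express specifications: 75W from the x16 slot, 75W per 6-pin connector, and 150W per 8-pin connector. It leaves out the newer 16-pin 12VHPWR connector, and real cards usually stay below this ceiling.

```python
from typing import List

# Nominal ratings from the PCI Express specifications, in watts
SLOT_WATTS = 75  # PCIe x16 slot
CONNECTOR_WATTS = {
    "6-pin": 75,
    "8-pin": 150,
}


def max_board_power(connectors: List[str]) -> int:
    """Nominal ceiling: slot power plus each auxiliary connector."""
    return SLOT_WATTS + sum(CONNECTOR_WATTS[c] for c in connectors)


for setup in ([], ["6-pin"], ["8-pin"], ["8-pin", "8-pin"]):
    print(setup or "slot only", "->", max_board_power(setup), "W")
```

This is why a single 8-pin card tops out at a nominal 225W, while a card with two 8-pin connectors can reach 375W.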

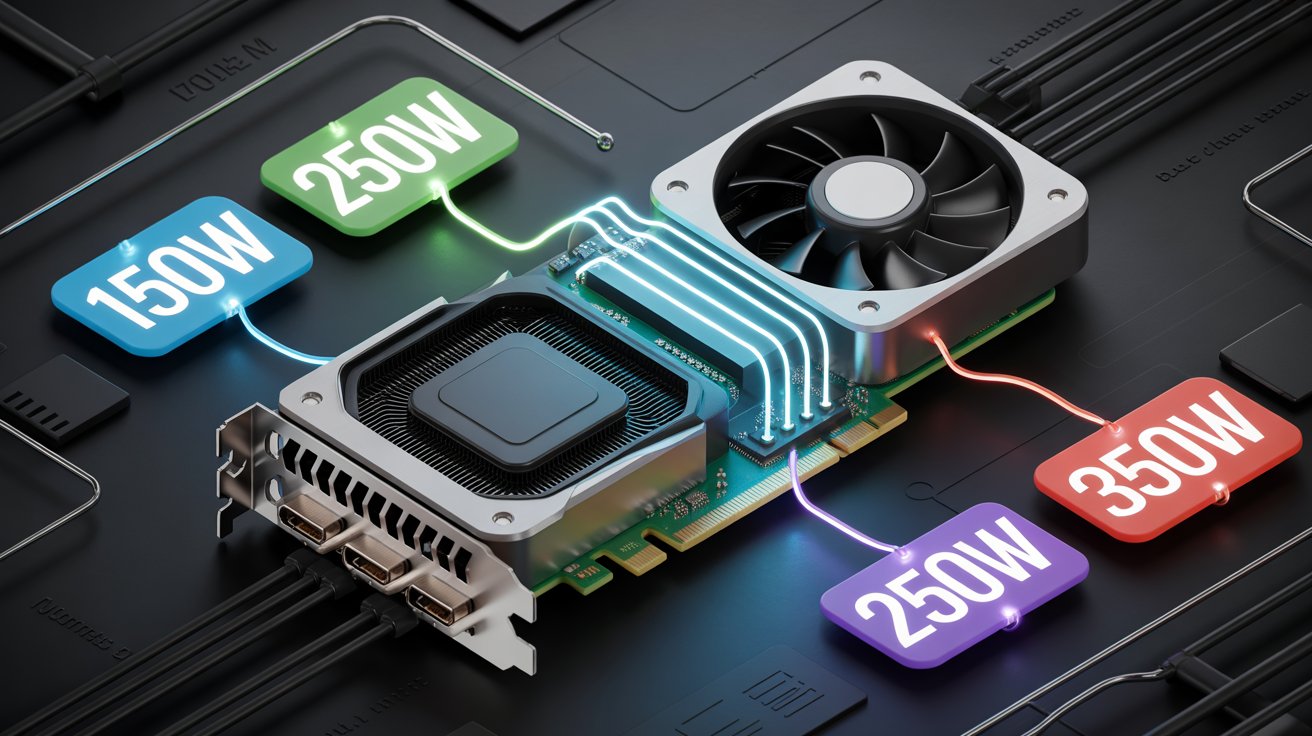

How Much Power Does a High-End GPU Use?

High-end GPUs are built for 4K gaming, ray tracing, AI work, and professional rendering. These cards deliver extreme performance but also draw significant power. They require strong cooling and high-quality PSUs to run safely and efficiently.

| GPU Type | Average Power Use |
|---|---|
| High-end gaming GPU | 250W – 350W |
| Extreme performance | 350W – 450W+ |

What Factors Affect GPU Power Consumption?

GPU power use changes based on how hard the graphics card is working. Simple tasks like browsing use very little power, while gaming or rendering sharply increases power draw. Better cooling and optimized drivers can help reduce unnecessary power draw and improve efficiency.

- Workload intensity (gaming vs idle)

- Screen resolution and graphics settings

- Clock speed and voltage level

- Cooling system quality

- Driver and software optimization

GPU Architecture and Design:

The architecture of a GPU determines how efficiently it uses power. Modern designs can do more work per watt, while older, less efficient designs need more electricity for the same work and produce more heat.

Key Points:

- Newer architectures are usually built on smaller manufacturing processes and move data more efficiently, so the GPU wastes less energy during tasks.

- Energy-efficient cores help the GPU give strong performance without pulling extra power.

- Advanced designs often manage heat more effectively, and because a cooler chip leaks less current, this also trims power consumption.

- Companies like NVIDIA and AMD focus on improving architecture because each efficiency gain delivers more performance from the same amount of power.
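A common way to compare efficiency across architectures is performance per watt. The sketch below computes frames per second per watt of board power; the FPS and wattage figures are hypothetical placeholders, not measurements of real cards.

```python
def perf_per_watt(avg_fps: float, avg_watts: float) -> float:
    """Frames per second delivered per watt of GPU board power."""
    if avg_watts <= 0:
        raise ValueError("avg_watts must be positive")
    return avg_fps / avg_watts


# Hypothetical figures: same game, same settings, same frame rate
older = perf_per_watt(avg_fps=90, avg_watts=220)
newer = perf_per_watt(avg_fps=90, avg_watts=160)
print(f"older: {older:.2f} FPS/W, newer: {newer:.2f} FPS/W")
```

Comparing cards at the same frame rate and settings isolates the efficiency difference: the card that needs fewer watts for the same 90 FPS scores higher.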

Clock Speed and Core Count:

Clock speed is how fast a GPU operates, and core count is how many operations it can process in parallel. Raising either one increases power draw, because more switching activity per second requires more energy.

- A higher clock speed means the GPU runs more cycles per second, which consumes more electricity.

- More cores give better performance in games and AI tasks, but they also draw extra power.

- When both are high, the GPU generates more heat, and the cooling system consumes more energy.

- Balanced clock settings help reduce power use without losing much performance.

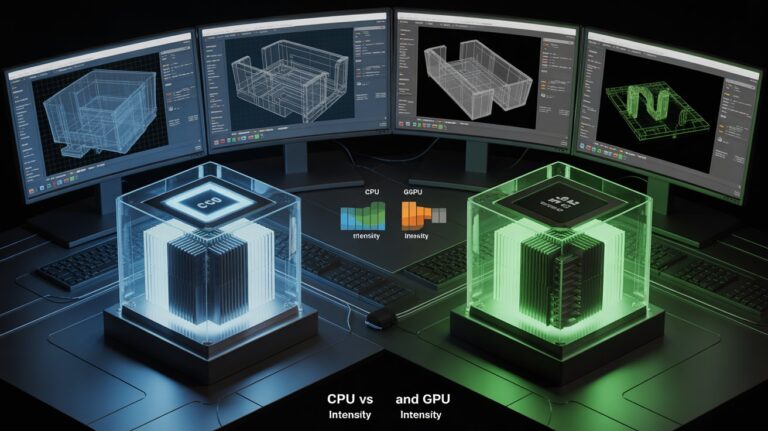

Type of Workload or Tasks:

Different tasks require different levels of GPU power. Heavy workloads make the GPU work harder, increasing electricity consumption.

- Gaming, video editing, and AI tasks push the GPU into high-performance mode, drawing close to its maximum power.

- Light tasks like browsing or watching videos use very little GPU energy.

- Some software is optimized to use power efficiently, even during heavy workloads.

- Background GPU tasks can also increase power usage without the user noticing.

How Do Clock Speed and Voltage Affect GPU Power?

When a GPU runs at higher clock speeds, it completes more work per second, which increases power draw. Voltage plays an even bigger role, because dynamic power rises roughly with the square of the voltage. This is why overclocked GPUs generally consume more power and require better cooling.

- Higher clock speed = more power usage.

- Higher voltage = more heat and energy draw.

- Overclocking increases total power consumption.

- Undervolting can reduce power without losing much performance.
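A common first-order model for a chip's dynamic (switching) power is P ≈ C·V²·f, where C is the switched capacitance, V the voltage, and f the clock frequency. Because voltage enters squared, a small undervolt can save noticeable power. The sketch below applies that ratio to a baseline reading; it treats the whole baseline as dynamic power, ignores leakage and real GPU boost behavior, and uses hypothetical voltages and clocks.

```python
def scaled_power(base_watts: float, base_volts: float, base_mhz: float,
                 new_volts: float, new_mhz: float) -> float:
    """Estimate power after a voltage/clock change with P ~ C * V^2 * f.

    C cancels out because we scale from a measured baseline.
    Leakage power and boost behavior are ignored, so treat this as rough.
    """
    return base_watts * (new_volts / base_volts) ** 2 * (new_mhz / base_mhz)


# Hypothetical baseline: 300 W at 1.05 V and 2500 MHz
undervolted = scaled_power(300, 1.05, 2500, new_volts=0.95, new_mhz=2500)
overclocked = scaled_power(300, 1.05, 2500, new_volts=1.10, new_mhz=2700)
print(f"undervolt: ~{undervolted:.0f} W, overclock: ~{overclocked:.0f} W")
```

Under these assumptions, dropping to 0.95V at the same clock lands near 246W, while the overclock climbs to roughly 356W.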

How Much Power Does a GPU Use for 1080p Gaming?

1080p gaming is not very demanding for modern graphics cards, so power draw stays moderate. Most mid-range GPUs handle 1080p smoothly without reaching their power limits. Power draw rises when you raise graphics settings such as texture quality, shadows, and ray tracing.

| Gaming Type | Power Usage Range |
|---|---|
| eSports titles | 60W – 120W |
| AAA games (High) | 100W – 150W |
| AAA games (Ultra) | 150W – 180W |

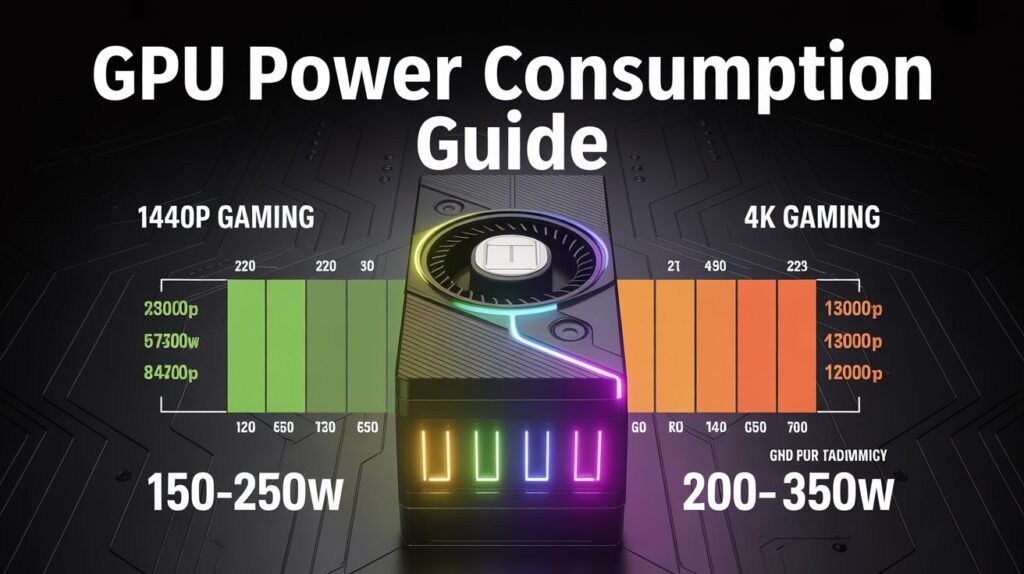

How Many Watts Does a GPU Use for 1440p and 4K Gaming?

Higher resolutions make your GPU work much harder because it has to render more pixels every second. 1440p gaming increases power draw compared to 1080p, while 4K gaming pushes GPUs close to their maximum power limits, especially with ray tracing and ultra settings enabled.

| Resolution | Average Power Use |
|---|---|
| 1440p | 180W – 250W |
| 4K | 250W – 350W+ |
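The rise in power follows the rise in work per frame. The short sketch below compares pixel counts for the standard 16:9 resolutions. Power does not scale one-to-one with pixels, since not every part of rendering depends on resolution and the card's power limit caps the draw.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_ratio(name: str, baseline: str = "1080p") -> float:
    """How many times more pixels `name` has than `baseline`."""
    w, h = RESOLUTIONS[name]
    bw, bh = RESOLUTIONS[baseline]
    return (w * h) / (bw * bh)


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {w * h:,} pixels, {pixel_ratio(name):.2f}x 1080p")
```

1440p has about 1.78 times as many pixels as 1080p, and 4K has exactly four times as many.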

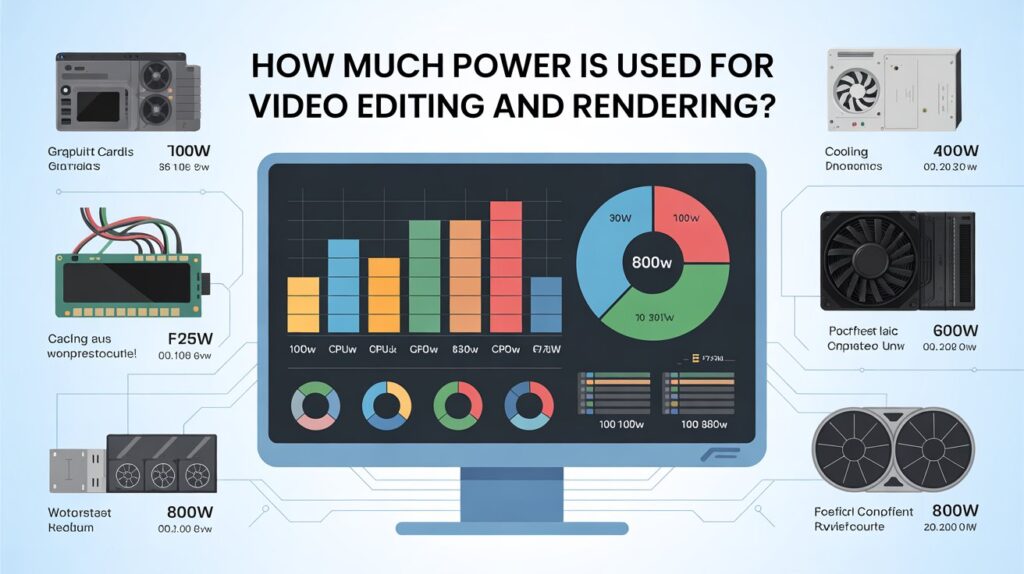

How Much Power Is Used for Video Editing and Rendering?

Video editing software places a heavy load on the GPU, especially during effects processing, color grading, and high-resolution exports. Power usage stays high during long rendering sessions because the GPU runs near full load for extended periods, and professional workloads can keep it there for hours.

Typical GPU power usage for creative work:

| Task Type | Power Usage Range |
|---|---|
| Basic video editing | 100W – 180W |
| 4K editing | 180W – 300W |
| Heavy 3D rendering | 250W – 350W+ |

How Do GPU Categories Compare in Wattage?

This table helps users quickly understand how much power different GPU types consume in real-world use. It makes it easier to choose the right graphics card and power supply without confusion. Comparing wattage also helps estimate electricity costs and cooling needs.

GPU wattage comparison table:

| GPU Category | Typical Power Usage |
|---|---|
| Low-end GPU | 20W – 75W |
| Mid-range GPU | 100W – 250W |
| High-end GPU | 250W – 450W+ |

How Does GPU Power Usage Impact the Environment?

GPU power usage affects the environment because the electricity GPUs consume has to be generated somewhere. The more electricity they use, the more energy power plants must produce, which leads to more pollution, especially when that electricity comes from fossil fuels.

Key Points:

- Higher electricity demand means more fuel gets burned at power plants, releasing harmful gases.

- More heat produced by GPUs requires extra cooling, which uses more electricity.

- Large data centers running thousands of GPUs consume enormous amounts of electricity, which can mean large carbon emissions when the grid relies on fossil fuels.

- Using renewable energy can greatly reduce the emissions linked to GPU power use.
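To put a number on the impact, multiply the energy used by the carbon intensity of your electricity grid. The sketch below does that; the 400g of CO2 per kWh figure is an assumed placeholder, since real grid intensity varies widely by country and even by hour.

```python
def annual_co2_kg(watts: float, hours_per_day: float,
                  grid_g_per_kwh: float, days: int = 365) -> float:
    """CO2 in kilograms from running a load daily at a given grid intensity."""
    kwh = watts * hours_per_day * days / 1000  # Wh -> kWh
    return kwh * grid_g_per_kwh / 1000         # grams -> kilograms


# Assumed example: 300 W GPU, 4 hours a day, 400 g CO2 per kWh
print(f"{annual_co2_kg(300, 4, 400):.1f} kg CO2 per year")
```

Under these assumptions, the GPU uses 438 kWh in a year, or roughly 175kg of CO2; on a grid with near-zero intensity the same usage produces almost no operating emissions.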

FAQs:

Can a GPU Draw More Power Than Its TDP?

Yes, under heavy load or overclocking, a GPU can exceed its TDP temporarily, drawing more watts and producing extra heat.

Do All Games Consume the Same GPU Power?

No, lightweight games use much less power, while AAA titles with high settings and ray tracing can significantly increase GPU wattage.

Is It Safe to Use a Low-Wattage PSU with a High-End GPU?

No, using an underpowered PSU can cause crashes, instability, or even damage your GPU and other components.

How Much Power Does a GPU Use When Idle?

Most GPUs draw between 10W and 50W while idle. Power draw rises only when running intensive tasks or high-resolution workloads.

Does GPU Power Usage Affect Electricity Bills?

Yes, GPUs consuming 250W+ during gaming or rendering can noticeably increase electricity costs over time.
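A quick estimate: energy in kWh equals watts times hours divided by 1,000, multiplied by your electricity price. The sketch below assumes a price of $0.15 per kWh purely for illustration; substitute your local rate.

```python
def annual_cost(watts: float, hours_per_day: float,
                price_per_kwh: float, days: int = 365) -> float:
    """Electricity cost of running a load daily for `days` days."""
    kwh = watts * hours_per_day * days / 1000  # Wh -> kWh
    return kwh * price_per_kwh


# Assumed example: 250 W while gaming, 3 hours a day, $0.15 per kWh
gaming = annual_cost(250, 3, 0.15)
print(f"about ${gaming:.2f} per year")
```

With these assumptions, 250W for three hours a day adds up to 273.75 kWh, or about $41 per year.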

Conclusion:

GPU power usage varies widely, from 20W on entry-level cards to over 450W on high-end models. Factors such as workload, clock speed, architecture, and cooling affect real-world power consumption. Understanding GPU wattage helps you choose the proper power supply, optimize performance, and manage electricity costs while ensuring your system runs efficiently and safely.